Problem

In visual surveillance tracking, separating multiple targets that occlude one another in the view of a single camera is extremely challenging.

Since multiple cameras can provide different perspectives, localizing the targets on the 3D ground plane with a network of cameras has become an important problem.

Previous methods usually solve the localization problem iteratively from background subtraction results, neglecting high-level image information.

To fully exploit the image information and thereby achieve higher localization precision, we propose incorporating human detection into the surveillance system.
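A human detector reports boxes in image coordinates, so its output has to be moved onto the ground plane before it can be compared across views. A common way to do this, assumed here rather than taken from the method above, is to map the bottom-center (foot point) of each box through an image-to-ground homography `H` and keep the best score per ground cell. The names `image_to_ground` and `detection_map`, the grid layout, and the max-score rule are all hypothetical.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float, float]  # (x1, y1, x2, y2, score) in pixels


def image_to_ground(H: Sequence[Sequence[float]], u: float, v: float) -> Tuple[float, float]:
    """Map image point (u, v) to ground-plane coordinates with a 3x3 homography H."""
    x = H[0][0] * u + H[0][1] * v + H[0][2]
    y = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    return x / w, y / w


def detection_map(boxes: List[Box], H, grid_w: int, grid_h: int,
                  cell: float) -> List[List[float]]:
    """Hypothetical per-camera detection map: each ground cell keeps the highest
    detector score whose foot point (bottom-center of the box) lands in it."""
    grid = [[0.0] * grid_w for _ in range(grid_h)]
    for x1, y1, x2, y2, score in boxes:
        # The feet touch the ground, so use the bottom-center of the box.
        gx, gy = image_to_ground(H, (x1 + x2) / 2.0, y2)
        i, j = int(gy // cell), int(gx // cell)
        if 0 <= i < grid_h and 0 <= j < grid_w:  # ignore points off the grid
            grid[i][j] = max(grid[i][j], score)
    return grid
```

Taking the maximum keeps overlapping detections of the same person from inflating a cell; summing or averaging would be equally plausible choices.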

We develop a novel formulation that combines human detection and background subtraction results to localize targets on the ground plane, and we solve it by convex optimization.
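To make the idea of a convex fusion concrete, here is a toy objective, not the formulation used in this work: each ground cell's occupancy `x_k` is pulled toward a weighted mix of the detection and background-subtraction evidence from every camera, a linear term `lam * sum(x)` favors empty cells, and the box constraint `0 <= x_k <= 1` keeps values valid. The weights `alpha` and `lam` and the function `fuse_occupancy` are hypothetical. Projected gradient descent applies because the objective is convex and the feasible set is a box.

```python
from typing import List


def fuse_occupancy(hum: List[List[float]], bs: List[List[float]],
                   alpha: float = 0.5, lam: float = 0.1,
                   iters: int = 100) -> List[float]:
    """Toy convex fusion of per-camera maps into one ground occupancy vector.

    hum[c][k], bs[c][k]: detection / background-subtraction evidence for
    ground cell k seen from camera c. Minimizes
        sum_c sum_k alpha*(x_k - hum[c][k])**2 + (1-alpha)*(x_k - bs[c][k])**2
        + lam * sum_k x_k,   subject to 0 <= x_k <= 1,
    by projected gradient descent. This objective is an assumption,
    not the paper's.
    """
    n_cams, n_cells = len(hum), len(hum[0])
    x = [0.0] * n_cells
    step = 1.0 / (2.0 * n_cams)  # 1 / Lipschitz constant of the gradient
    for _ in range(iters):
        for k in range(n_cells):
            grad = lam + sum(2.0 * alpha * (x[k] - hum[c][k])
                             + 2.0 * (1.0 - alpha) * (x[k] - bs[c][k])
                             for c in range(n_cams))
            # Gradient step, then project back onto the box [0, 1].
            x[k] = min(1.0, max(0.0, x[k] - step * grad))
    return x
```

Because this toy objective decouples across cells, each `x_k` also has the closed form `clip(mean_c[alpha*hum + (1-alpha)*bs] - lam/(2C), 0, 1)` for `C` cameras; the iterative solver only becomes necessary once the objective couples cells.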

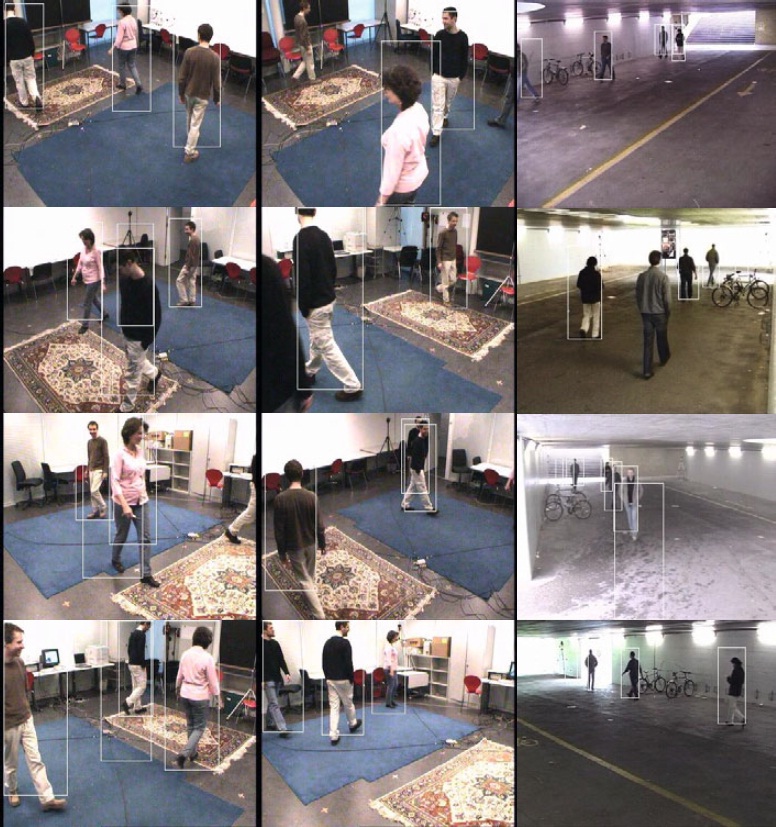

Figure. Overview of our method. First, a human detector is trained and used to create the map $P_c^{\mathrm{HUM}}$. Second, foreground regions are used to generate the map $P_c^{\mathrm{BS}}$. Finally, the two kinds of information are integrated into an objective function over the true occupancy map, and the solution is obtained by convex optimization.
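The background-subtraction map is built from foreground regions. One standard way to score a ground cell from a foreground mask, assumed here, is the fraction of foreground pixels inside the image rectangle where a person standing on that cell would appear, computed in constant time per rectangle with an integral image. The precomputed rectangles and the functions `integral_image` and `background_map` are hypothetical.

```python
from typing import List, Tuple

Rect = Tuple[int, int, int, int]  # (x1, y1, x2, y2), half-open pixel ranges


def integral_image(mask: List[List[int]]) -> List[List[int]]:
    """S[y][x] = number of foreground pixels in mask[0:y][0:x]."""
    h, w = len(mask), len(mask[0])
    S = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row = 0  # running sum of mask[y][0..x]
        for x in range(w):
            row += mask[y][x]
            S[y + 1][x + 1] = S[y][x + 1] + row
    return S


def background_map(mask: List[List[int]], rects: List[Rect]) -> List[float]:
    """Hypothetical per-camera map: foreground ratio inside the rectangle
    a person standing on each ground cell would occupy in this view."""
    S = integral_image(mask)
    out = []
    for x1, y1, x2, y2 in rects:
        area = (x2 - x1) * (y2 - y1)
        # Four lookups give the foreground count of any axis-aligned rectangle.
        fg = S[y2][x2] - S[y1][x2] - S[y2][x1] + S[y1][x1]
        out.append(fg / area if area > 0 else 0.0)
    return out
```

The integral image is built once per frame, so scoring every ground cell costs one pass over the mask plus four lookups per cell.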

Result

We evaluate our method on three public datasets: the 6p, Terrace, and Passageway sequences.

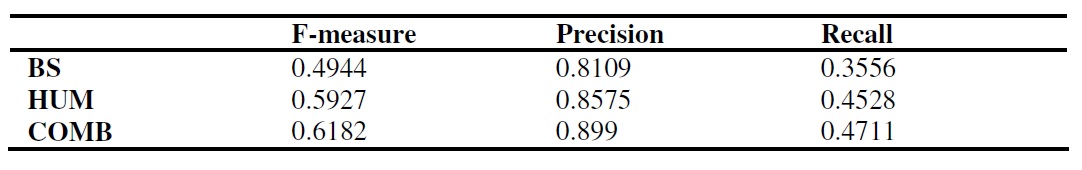

Each sequence contains four views and ground truth map. First, we test our method on Terrace sequence and show that fusion the maps generate by human detection and background

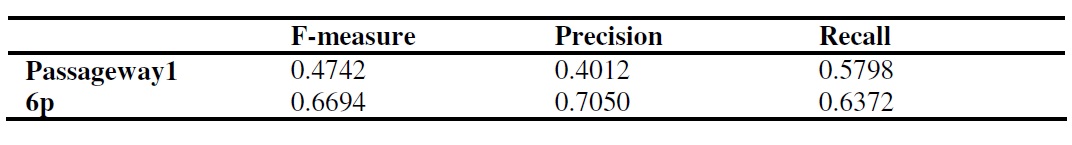

subtraction can improve the localization result over using a single map respectively. We also show the results on other two datasets.

Table 1. Comparison of our fusion method with human detection alone and background subtraction alone on the Terrace sequence.

Table 2. Evaluation of our method on the 6p and Passageway sequences.

Visualization results. The boxes are rendered by projecting cubes with a height of 1.75 m, located on the 3D ground plane, into the image.
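The rendering in these visualizations can be approximated as follows, under assumptions not stated above: each camera has a known 3x4 projection matrix `P`, the cube has an assumed 0.5 m square footprint and the stated 1.75 m height, and the drawn box is the axis-aligned bounding box of the eight projected corners. `project` and `person_box` are hypothetical helpers.

```python
from itertools import product
from typing import Sequence, Tuple


def project(P: Sequence[Sequence[float]], X: Tuple[float, float, float]) -> Tuple[float, float]:
    """Project a 3D world point with a 3x4 pinhole camera matrix P."""
    Xh = (X[0], X[1], X[2], 1.0)  # homogeneous coordinates
    u, v, w = (sum(P[r][i] * Xh[i] for i in range(4)) for r in range(3))
    return u / w, v / w


def person_box(P, x: float, y: float, height: float = 1.75, width: float = 0.5):
    """Image bounding box (u_min, v_min, u_max, v_max) of a width x width x height
    box standing on ground point (x, y, 0). width=0.5 m is an assumed footprint."""
    half = width / 2.0
    corners = [project(P, (x + dx, y + dy, z))
               for dx, dy, z in product((-half, half), (-half, half), (0.0, height))]
    us = [c[0] for c in corners]
    vs = [c[1] for c in corners]
    return min(us), min(vs), max(us), max(vs)
```

Taking the bounding box of all eight corners, rather than of the top and bottom centers only, keeps the drawn box wide enough when the camera looks at the cube obliquely.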